MOST scientists STILL can’t replicate or reproduce studies by their peers / How broken science REALLY is if even Trump picked up on this crisis?!

“If you look at all the published literature - not just the indexed articles on PubMed but everything that is published anywhere - probably 90% of it is not reproducible... And probably 20–30% of it is totally made up.” - Prof. Csaba Szabo, University of Fribourg

“It’s worrying because replication is supposed to be a hallmark of scientific integrity” - Dr Tim Errington, University of Virginia

This fundamental flaw is actually really old, possibly as old as science, but it first came to my attention via a 2017 BBC article that I’m reproducing a bit further below.

I sounded the alarm as loudly as I could back then, but people were still having it good, and they were captured by whatever psyops were being hyped that day on TV or on YouTube (the new cable TV), so I drew little attention. It might happen again, but this time I’ve noticed the wave is way larger.

And the problem with bad science is that it creeps into the body of healthy science like cancer, so, if untreated, it gets exponentially worse.

That seems to be exactly what happened. Fast forward to 2025: science looks really ill, and it doesn’t have health insurance. A sizable batch of articles, and even books, has relit the torch that signals danger.

The best introduction to this topic I found in a science publication originally appeared in The Conversation, whose writers are known as occasional science worshipers rather than constant science followers:

Research replication can determine how well science is working – but how do scientists replicate studies?

by Amanda Kay Montoya, University of California / Modern Sciences, July 30 2025

Back in high school chemistry, I remember waiting with my bench partner for crystals to form on our stick in the cup of blue solution. Other groups around us jumped with joy when their crystals formed, but my group just waited. When the bell rang, everyone left but me. My teacher came over, picked up an unopened bag on the counter and told me, “Crystals can’t grow if the salt is not in the solution.”

To me, this was how science worked: What you expect to happen is clear and concrete. And if it doesn’t happen, you’ve done something wrong.

If only it were that simple.

It took me many years to realize that science is not just some series of activities where you know what will happen at the end. Instead, science is about discovering and generating new knowledge.

Now, I’m a psychologist studying how scientists do science. How do new methods and tools get adopted? How do changes happen in scientific fields, and what hinders changes in the way we do science?

One practice that has fascinated me for many years is replication research, where a research group tries to redo a previous study. Like with the crystals, getting the same result from different teams doesn’t always happen, and when you’re on the team whose crystals don’t grow, you don’t know if the study didn’t work because the theory is wrong, or whether you forgot to put the salt in the solution.

The replication crisis

A May 2025 executive order by President Donald Trump emphasized the “reproducibility crisis” in science. While replicability and reproducibility may sound similar, they’re distinct.

Reproducibility is the ability to use the same data and methods from a study and reproduce the result. In my editorial role at the journal Psychological Science, I conduct computational reproducibility checks where we take the reported data and check that all the results in the paper can be reproduced independently.

But we’re not running the study over again, or collecting new data. While reproducibility is important, research that is incorrect, fallible and sometimes harmful can still be reproducible.
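A computational reproducibility check comes down to recomputing each reported number from the shared data and comparing it with what the paper says. Here is a minimal Python sketch of that idea. The dataset, the reported values and the function name are all hypothetical, and this is not the journal’s actual procedure, which covers complete analyses rather than two summary statistics:

```python
import statistics

def check_reported_stats(data, reported, tolerance=0.01):
    """Recompute summary statistics from raw data and flag any reported value that does not match."""
    recomputed = {
        "mean": statistics.fmean(data),
        "sd": statistics.stdev(data),  # sample standard deviation
    }
    return {name: abs(recomputed[name] - value) <= tolerance for name, value in reported.items()}

# Hypothetical shared data and the values a paper claims to have computed from it.
shared_data = [4, 5, 6, 7, 8]
reported_in_paper = {"mean": 6.00, "sd": 1.85}

print(check_reported_stats(shared_data, reported_in_paper))
# {'mean': True, 'sd': False}: the recomputed SD is about 1.58, so the reported 1.85 does not reproduce
```

A real check would rerun the full analysis, including models, test statistics and p-values. The principle stays the same: same data, same method, same numbers.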

By contrast, replication is when an independent team repeats the same process, including collecting new data, to see if they get the same results. When research replicates, the team can be more confident that the results are not a fluke or an error.

[Figure: Reproducibility and replicability are both important, but have key differences. Open Economics Guide, CC BY]

The “replication crisis,” a term coined in psychology in the early 2010s, has spread to many fields, including biology, economics, medicine and computer science. Failures to replicate high-profile studies concern many scientists in these fields.

Why replicate?

Replicability is a core scientific value: Researchers want to be able to find the same result again and again. Many important findings are not published until they are independently replicated.

In research, chance findings can occur. Imagine if one person flipped a coin 10 times and got two heads, then told the world that “coins have a 20% chance of coming up heads.” Even though this is an unlikely outcome – about 4% – it’s possible.
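The “about 4%” figure is the binomial probability of exactly two heads in ten flips of a fair coin: C(10, 2)/2^10 = 45/1024. A quick Python check, assuming a fair coin and independent flips:

```python
from math import comb

def prob_exactly_k_heads(n: int, k: int, p_heads: float = 0.5) -> float:
    """Binomial probability of exactly k heads in n independent flips of a coin."""
    return comb(n, k) * p_heads**k * (1 - p_heads) ** (n - k)

p_two_heads = prob_exactly_k_heads(10, 2)
print(f"P(exactly 2 heads in 10 fair flips) = {p_two_heads:.4f}")  # 45/1024, about 0.0439
```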

Replications can correct these chance outcomes, as well as scientific errors, to ensure science is self-correcting.

For example, in the search for the Higgs boson, two independent experiments at CERN, the European Organization for Nuclear Research, ATLAS and CMS, each detected a particle with a large, unique mass, a replicated discovery that led to the 2013 Nobel Prize in physics.

The two experiments’ initial measurements actually put the particle’s mass at slightly different values. So while the two teams didn’t find identical results, they evaluated them and determined they were close enough. This variability is a natural part of the scientific process. Just because results are not identical does not mean they are not reliable.
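One common textbook way to judge whether two independent measurements are “close enough” is to express their difference in units of their combined uncertainty. Below is a Python sketch with made-up numbers. These are not the published ATLAS or CMS values, and the real physics analyses were far more involved:

```python
from math import sqrt

def compatibility_z(x1: float, sigma1: float, x2: float, sigma2: float) -> float:
    """Difference between two independent measurements, in units of its combined standard error."""
    return abs(x1 - x2) / sqrt(sigma1**2 + sigma2**2)

# Hypothetical mass estimates (arbitrary units) with their standard errors.
z = compatibility_z(100.0, 0.6, 99.2, 0.8)
print(f"difference = {z:.2f} combined standard errors")
```

A gap of less than one combined standard error, as in this invented case, is usually read as consistent with a single true value. A gap of several combined standard errors would point to a real disagreement or an unaccounted-for error.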

Research centers like CERN have replication built into their process, but this is not feasible for all research. For projects that are relatively low cost, the original team will often replicate their work prior to publication – but doing so does not guarantee that an independent team could get the same results.

[Figure: XKCD graph of COVID-19 cases (y axis) over time (x axis); the line labeled ‘placebo group’ climbs from zero at a 45-degree angle, while the line labeled ‘vaccine group’ rises slightly and then plateaus. Caption: Because the results on vaccine efficacy were so clear, replication wasn’t necessary and would have slowed the process of getting the vaccine to people. XKCD, CC BY-NC]

When projects are costly, urgent or time-specific, independently replicating them prior to disseminating results is often not feasible. Remember when people across the country were waiting for a COVID-19 vaccine?

The initial Pfizer-BioNTech COVID-19 vaccine took 13 months from the start of the trial to authorization from the Food and Drug Administration. The results of the initial study were so clear and convincing that a replication would have unnecessarily delayed getting the vaccine out to the public and slowing the spread of disease.

Since not every study can be replicated prior to publication, it’s important to conduct replications after studies are published. Replications help scientists understand how well research processes are working, identify errors and self-correct. So what’s the process of conducting a replication?

The replication process

Researchers could independently replicate the work of other teams, like at CERN. And that does happen. But when there are only two studies – the original and the replication – it’s hard to know what to do when they disagree. For that reason, large multigroup teams often conduct replications where they are all replicating the same study.

Alternatively, if the purpose is to estimate the replicability of a body of research – for example, cancer biology – each team might replicate a different study, and the focus is on the percentage of studies that replicate across many studies.

These large-scale replication projects have arisen around the world and include ManyLabs, ManyBabies, the Psychological Science Accelerator and others.
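When several labs replicate the same study, their estimates still have to be combined. One standard approach is an inverse-variance weighted average, known as a fixed-effect meta-analysis. This is an assumption on my part, not necessarily what ManyLabs or the other projects use, and many multi-lab analyses instead use random-effects models that allow for genuine differences between labs. Here is a Python sketch with hypothetical numbers:

```python
from math import sqrt

def fixed_effect_pool(estimates, std_errors):
    """Inverse-variance weighted average of study estimates (fixed-effect meta-analysis)."""
    weights = [1 / se**2 for se in std_errors]  # more precise studies count for more
    pooled = sum(w * e for w, e in zip(weights, estimates)) / sum(weights)
    pooled_se = sqrt(1 / sum(weights))
    return pooled, pooled_se

# Hypothetical effect sizes: an original study followed by five replicating labs.
estimates = [0.60, 0.10, 0.05, 0.20, 0.12, 0.08]
std_errors = [0.25, 0.10, 0.12, 0.11, 0.10, 0.09]

pooled, pooled_se = fixed_effect_pool(estimates, std_errors)
print(f"pooled effect = {pooled:.2f} (SE {pooled_se:.2f})")
```

In this made-up example the pooled estimate lands much closer to the five replications than to the original, because together they carry far more weight. Its standard error is also smaller than any single study’s. With only two studies that disagree, there is no such majority to lean on.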

Replicators start by learning as much as possible about how the original study was conducted. They can collect details about the study from reading the published paper, discussing the work with its original authors and consulting online materials.

The replicators want to know how the participants were recruited, how the data was collected and using what tools, and how the data was analyzed.

But sometimes, studies leave out important details, like the questions participants were asked or the brand of equipment used. Replicators then have to fill in those gaps themselves, and those choices can affect the outcome.

Replicators also often explicitly change details of the study. For example, many replication studies are conducted with larger samples – more participants – than the original study, to ensure the results are reliable.
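A standard power calculation shows concretely why larger samples make results more reliable. This Python sketch uses a normal approximation for a two-group comparison with a standardized effect size d. The effect size and sample sizes are illustrative assumptions:

```python
from math import ceil, sqrt
from statistics import NormalDist

STD_NORMAL = NormalDist()  # standard normal distribution (mean 0, sd 1)

def approx_power(d: float, n_per_group: int, alpha: float = 0.05) -> float:
    """Approximate chance that a two-sided two-group test detects a true effect of size d."""
    z_crit = STD_NORMAL.inv_cdf(1 - alpha / 2)
    return STD_NORMAL.cdf(d * sqrt(n_per_group / 2) - z_crit)

def n_per_group_for_power(d: float, power: float = 0.8, alpha: float = 0.05) -> int:
    """Approximate sample size per group needed to reach the target power."""
    z_crit = STD_NORMAL.inv_cdf(1 - alpha / 2)
    z_power = STD_NORMAL.inv_cdf(power)
    return ceil(2 * ((z_crit + z_power) / d) ** 2)

for n in (20, 50, 100):
    print(f"n = {n:3d} per group -> power about {approx_power(0.5, n):.2f}")
print("needed for 80% power:", n_per_group_for_power(0.5))  # 63 under this approximation
```

The sketch uses z rather than t and ignores the tiny chance of a significant result in the wrong direction. Exact t-test calculations therefore come out slightly higher, about 64 per group for d = 0.5.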

Sadly, replication research is hard to publish: Only 3% of papers in psychology, less than 1% in education and 1.2% in marketing are replications.

If the original study replicates, journals may reject the paper because there is no “new insight.” If it doesn’t replicate, journals may reject the paper because they assume the replicators made a mistake – remember the salt crystals.

Because of these issues, replicators often use registration to strengthen their claims. A preregistration is a public document describing the plan for the study. It is time-stamped to before the study is conducted.

This type of document improves transparency by making changes in the plan detectable to reviewers. Registered reports take this a step further, where the research plan is subject to peer review before conducting the study.
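A cryptographic fingerprint can illustrate how a time-stamped plan makes later changes detectable. This is a hypothetical sketch. The function names and plan text are invented, and real registries have their own mechanisms, which this does not reproduce:

```python
import hashlib
from datetime import datetime, timezone

def register_plan(plan_text: str) -> dict:
    """Record a SHA-256 fingerprint of the study plan together with a UTC timestamp."""
    return {
        "sha256": hashlib.sha256(plan_text.encode("utf-8")).hexdigest(),
        "registered_at": datetime.now(timezone.utc).isoformat(),
    }

def matches_registration(record: dict, plan_as_reported: str) -> bool:
    """True only if the reported plan is byte-for-byte the plan that was registered."""
    return hashlib.sha256(plan_as_reported.encode("utf-8")).hexdigest() == record["sha256"]

# Hypothetical plan, registered before any data are collected.
original_plan = "Hypothesis: condition A improves recall. N = 200 per group. Test: two-sided t-test, alpha = 0.05."
record = register_plan(original_plan)

# Later, the paper quietly reports a smaller sample and a one-sided test.
altered_plan = original_plan.replace("N = 200", "N = 150").replace("two-sided", "one-sided")

print(matches_registration(record, original_plan))  # True
print(matches_registration(record, altered_plan))   # False
```

Any edit, even a single character, changes the fingerprint, so a reviewer who compares the two can tell the plan moved. The fingerprint cannot force anyone to actually make that comparison.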

If the journal approves the registration, it commits to publishing the study regardless of the results. Registered reports are ideal for replication research because the reviewers don’t know the results when the journal commits to publishing the paper, and whether the study replicates or not won’t affect whether it gets published.

About 58% of registered reports in psychology are replication studies.

Replication research often uses the highest standards of research practice: large samples and registration. While not all replication research is required to use these practices, those that do contribute greatly to our confidence in scientific results.

Replication research is a useful thermometer to understand if scientific processes are working as intended. Active discussion of the replicability crisis, in both scientific and political spaces, suggests to many researchers that there is room for growth. While no field would expect a replication rate of 100%, new processes among scientists aim to improve on the rates of the past.

Now here’s the BBC mixing up reproducibility and replication, as a “top shelf authority source” should. Regardless, you can extract the useful info.

Most scientists ‘can’t replicate studies by their peers’

BBC, 22 February 2017

Scientists attempting to repeat findings reported in five landmark cancer studies confirmed only two

Science is facing a “reproducibility crisis” where more than two-thirds of researchers have tried and failed to reproduce another scientist’s experiments, research suggests.

This is frustrating clinicians and drug developers who want solid foundations of pre-clinical research to build upon.

From his lab at the University of Virginia’s Centre for Open Science, immunologist Dr Tim Errington runs The Reproducibility Project, which attempted to repeat the findings reported in five landmark cancer studies.

“The idea here is to take a bunch of experiments and to try and do the exact same thing to see if we can get the same results.”

You could be forgiven for thinking that should be easy. Experiments are supposed to be replicable.

The authors should have done it themselves before publication, and all you have to do is read the methods section in the paper and follow the instructions.

Sadly nothing, it seems, could be further from the truth.

After meticulous research involving painstaking attention to detail over several years (the project was launched in 2011), the team was able to confirm only two of the original studies’ findings.

Two more proved inconclusive and in the fifth, the team completely failed to replicate the result.

“It’s worrying because replication is supposed to be a hallmark of scientific integrity,” says Dr Errington.

Concern over the reliability of the results published in scientific literature has been growing for some time.

According to a survey published in the journal Nature last summer, more than 70% of researchers have tried and failed to reproduce another scientist’s experiments.

Marcus Munafo is one of them. Now professor of biological psychology at Bristol University, he almost gave up on a career in science when, as a PhD student, he failed to reproduce a textbook study on anxiety.

“I had a crisis of confidence. I thought maybe it’s me, maybe I didn’t run my study well, maybe I’m not cut out to be a scientist.”

The problem, it turned out, was not with Marcus Munafo’s science, but with the way the scientific literature had been “tidied up” to present a much clearer, more robust outcome.

“What we see in the published literature is a highly curated version of what’s actually happened,” he says.

“The trouble is that gives you a rose-tinted view of the evidence because the results that get published tend to be the most interesting, the most exciting, novel, eye-catching, unexpected results.

“What I think of as high-risk, high-return results.”

The reproducibility difficulties are not about fraud, according to Dame Ottoline Leyser, director of the Sainsbury Laboratory at the University of Cambridge.

That would be relatively easy to stamp out. Instead, she says: “It’s about a culture that promotes impact over substance, flashy findings over the dull, confirmatory work that most of science is about.”

She says it’s about the funding bodies that want to secure the biggest bang for their bucks, the peer review journals that vie to publish the most exciting breakthroughs, the institutes and universities that measure success in grants won and papers published and the ambition of the researchers themselves.

“Everyone has to take a share of the blame,” she argues. “The way the system is set up encourages less than optimal outcomes.”

For its part, the journal Nature is taking steps to address the problem.

It’s introduced a reproducibility checklist for submitting authors, designed to improve reliability and rigour.

“Replication is something scientists should be thinking about before they write the paper,” says Ritu Dhand, the editorial director at Nature.

“It is a big problem, but it’s something the journals can’t tackle on their own. It’s going to take a multi-pronged approach involving funders, the institutes, the journals and the researchers.”

But we need to be bolder, according to the Edinburgh neuroscientist Prof Malcolm Macleod.

“The issue of replication goes to the heart of the scientific process.”

Writing in the latest edition of Nature, he outlines a new approach to animal studies that calls for independent, statistically rigorous confirmation of a paper’s central hypothesis before publication.

“Without efforts to reproduce the findings of others, we don’t know if the facts out there actually represent what’s happening in biology or not.”

Without knowing whether the published scientific literature is built on solid foundations or sand, he argues, we’re wasting both time and money.

“It could be that we would be much further forward in terms of developing new cures and treatments. It’s a regrettable situation, but I’m afraid that’s the situation we find ourselves in.”

Almost a decade later:

Fascinating, but does it replicate? The reproducibility crisis is undermining scientific trust

Particle journal, 2025 / edited by Lisa Lock, reviewed by Robert Egan

Over the last few centuries, the scientific method has established itself as a pretty useful tool.

A key facet of scientific methodology is reproducibility.

Essentially, good science should be easily reproduced with the same methodology in a different setting.

Ten years ago, Nature published an article noting that more than half of psychological studies cannot be replicated.

It seems little has changed since then, with claims that sociology, economics, medicine and other fields are all experiencing reproducibility issues.

Dodgy dudes doing dodgy things

Science is littered with dodgy work that, despite heavy criticism, remains influential.

Take the Stanford Prison Experiment. A team of researchers randomly assigned volunteers as either prisoners or guards to study behavior in jails.

The study, planned to run for two weeks, was called off after just six days, once “prisoners” were being abused by “guards.”

This was unreplicable for several reasons. First, “prisoners” were not protected from the “guards,” which cannot be allowed to happen again.

Second, the lead researcher, Philip Zimbardo, was instructing the guards about how to act, undermining the experiment’s premise.

Scientists have nevertheless attempted to replicate something similar with inconsistent results.

Digital disinformation

Associate Professor Chris Kavanagh is a cultural anthropologist and psychologist at Rikkyo University.

He has been vocal about this issue, pointing out how far the replication crisis reaches through unregulated podcasts like Huberman Lab and popular science YouTube channels such as TED, which keep spreading findings that don’t replicate.

The influential TED Talk “Your Body Language May Shape Who You Are” is guilty of this.

Social psychologist Dr. Amy Cuddy claims that altering your posture can change how you feel and forcing a smile can make you happier.

She reached this conclusion by measuring cortisol levels in people’s saliva after they had held a “power” pose for just minutes.

Supposedly, people who posed like a gorilla felt more confident and were less stressed.

The problem is that power poses aren’t so powerful under a rigorous scientific lens.

Scientists have struggled to replicate Cuddy’s research, but the TED Talk has amassed an impressive 27 million views.

Publish or perish

Chris argues there’s fault on both sides of the academic publishing industry.

“There’s a well-known issue called publication bias,” he says.

Publication bias is the propensity for academic journals to prioritize studies with positive, statistically significant results.

“Journals want to publish interesting results with novel findings, but science relies on negative results and things which aren’t really groundbreaking,” says Chris.

“They deserve a lot of the blame for the situation because a lot of the incentives were allowing questionable research practices.”
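A small simulation shows how publication bias distorts the literature. The sketch below assumes a hypothetical small true effect (d = 0.2), many small studies, and journals that publish only positive, significant results. For speed, it draws each study’s estimate straight from its approximate sampling distribution rather than simulating individual participants:

```python
import random
from math import sqrt
from statistics import fmean

def simulate_publication_bias(true_d=0.2, n_per_group=20, n_studies=5000, seed=7):
    """Compare the average effect across all studies with the average across 'published' ones."""
    rng = random.Random(seed)
    se = sqrt(2 / n_per_group)  # rough standard error of a standardized mean difference
    all_estimates, published = [], []
    for _ in range(n_studies):
        estimate = rng.gauss(true_d, se)  # each study's estimate scatters around the true effect
        all_estimates.append(estimate)
        if estimate / se > 1.96:  # only positive, significant results make it into print
            published.append(estimate)
    return fmean(all_estimates), fmean(published), len(published) / n_studies

mean_all, mean_published, share_published = simulate_publication_bias()
print(f"true effect 0.20 | all studies {mean_all:.2f} | published only {mean_published:.2f} "
      f"| share published {share_published:.0%}")
```

In this setup only a small share of studies gets “published.” Their average effect comes out several times larger than the true one, even though the average across all studies stays close to the truth.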

That said, researchers contribute to the problem as well.

“Academics are trained to respond to the incentives of the publishing industry,” says Chris.

He says students are told, “You’ve collected all this data and the results are negative, you don’t need to panic.”

“Go back to your data with an open mind … Control for different things and see if you can find a result.”

This kind of retrospective malpractice is known as p-hacking.

The p-value is the probability of getting a result at least as extreme as the one observed if chance alone were at work, that is, if there were no real effect.

P-hacking generally occurs when scientists, finding their results boring or non-significant, go back to data that has already been collected and rework their hypotheses or analyses until something crosses the significance threshold.
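One common form of p-hacking is measuring several outcomes and reporting whichever one comes out significant, and it can be simulated in a few lines. This Python sketch assumes there is no true effect at all. It also assumes hypothetical groups of 30 and uses a large-sample z-test as a stand-in for a t-test:

```python
import random
from math import sqrt
from statistics import NormalDist, fmean, stdev

STD_NORMAL = NormalDist()

def two_sample_p(a, b):
    """Approximate two-sided p-value for a difference in means (large-sample z-test)."""
    se = sqrt(stdev(a) ** 2 / len(a) + stdev(b) ** 2 / len(b))
    z = (fmean(a) - fmean(b)) / se
    return 2 * (1 - STD_NORMAL.cdf(abs(z)))

def false_positive_rate(n_outcomes, n_studies=1000, n_per_group=30, alpha=0.05, seed=1):
    """Share of null studies that can report at least one 'significant' outcome."""
    rng = random.Random(seed)
    hits = 0
    for _ in range(n_studies):
        p_values = []
        for _ in range(n_outcomes):
            # No real effect: both groups come from the same distribution.
            a = [rng.gauss(0, 1) for _ in range(n_per_group)]
            b = [rng.gauss(0, 1) for _ in range(n_per_group)]
            p_values.append(two_sample_p(a, b))
        if min(p_values) < alpha:  # report whichever outcome "worked"
            hits += 1
    return hits / n_studies

print("one pre-specified outcome:", false_positive_rate(1))
print("best of five outcomes:    ", false_positive_rate(5))
print("theory for 5 independent tests:", round(1 - 0.95 ** 5, 3))  # 0.226
```

With a single pre-specified outcome, the false-positive rate stays near the nominal 5%. Allowing yourself to pick the best of five pushes it above 20%, even though nothing real is going on.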

Just pre- better

In recent years, things appear to have improved thanks to initiatives such as the Journal of Negative Results, a now-defunct journal dedicated to publishing studies with no significant outcomes.

Or there’s the Many Labs Project, where 51 researchers from 35 institutions tested the outcomes of significant studies using exactly the same methodology, often with results that the original researchers found less than satisfying.

Since the replication alarm bells rang a few years ago, a lot of the social sciences have cleaned up their act.

Chris suggests this is thanks to an increase in the number of pre-registered studies.

Pre-registration is the process of recording the methods and analysis plan of your study in a public database before the study has been conducted.

That way, you can’t skew the data afterwards to suit preconceived notions or the biases of academic publishers.

However, pre-registration isn’t a silver bullet.

“Very few people go and check pre-registrations. Very few people download datasets and analyze them,” says Chris.

“It’s not usually a prerequisite for publication.”

So while we have good reason to trust in the scientific process of criticism and debate, there are still plenty of gaps for crappy science to slip through.

The crisis is not marginal and it can’t be sidestepped: it grips the very engine of science. So much so that it drove a frustrated scientist to write a massive book about it.

‘Why is it that nobody can reproduce anybody else’s findings?’

Pharmacologist Csaba Szabo discusses his upcoming book, “Unreliable”

C&EN, February 27, 2025

Biomedical scientists around the world publish around 1 million new papers a year. But a staggering number of these cannot be replicated. Drastic action is needed now to clean up this mess, argues pharmacologist Csaba Szabo in Unreliable, his upcoming book on irreproducibility in the scientific literature.

...

“The things that we’ve tried are not working,” says Szabo, a professor at the University of Fribourg. “I’m trying to suggest something different.”

“Unreliable” is a disturbing but compelling exploration of the causes of irreproducibility, from hypercompetition and honest errors to statistical shenanigans and outright fraud.

In the book, Szabo argues that there is no quick fix and that incremental efforts such as educational workshops and checklists have made little difference. He says the whole system has to be overhauled with new scientific training programs, different ways of allocating funding, novel publication systems, and more criminal charges for fraudsters.

“We need to figure out how to reduce the waste and make science more effective,” Szabo says.

This interview was edited for clarity and length.

Why did you write this book?

Like most scientists, I go to scientific meetings and go out for beers with other scientists, and this topic keeps coming up. “Why is it that nobody can reproduce anybody else’s findings?” we ask. This is not a new question. I was sitting around a table with some colleagues and collaborators in New York at the start of my sabbatical, and one of them said, “Somebody should write a book about this.” Another said to me, “You are on sabbatical,” and to be honest, I’d vaguely had this idea already.

It felt like everybody wanted this book to happen, but nobody had wanted to write it.

What were your biggest takeaways?

If you look at all the published literature - not just the indexed articles on PubMed but everything that is published anywhere - probably 90% of it is not reproducible. That was shocking even to me. And probably 20–30% of it is totally made up.

I didn’t expect the numbers to be that high going into this. It’s just absurd, but this is what I came to conclude.

Just think about all the money wasted. And paper mills [fraudulent organizations that write and publish fake research] make several billion dollars per year. This is a serious industry.

Another big shock was learning that the work of trying to do something about the reproducibility crisis (trying to clean up the literature, trying to identify bad actors) is not done by the establishment: not by grant-giving bodies, universities, journals or governments. Who is doing this? Private investigators, working in their free time at home, while worrying about who is going to sue them.

What kind of a system is this?

You propose some radical solutions. Walk me through these.

This is an ecosystem. You need to reform the education, the granting process, the publication process, and everything else all at once.

If you start on the education side, what I propose is that there should be separate training tracks for “scientific discovery” and “scientific integrity.” People need to be trained in how to do experimental design, statistical analysis, data integrity, and independent refereeing of papers and grants—and this should be its own separate profession.

On the granting side, for the most part right now we work in a system where each scientist is viewed as a solo artist, and they submit individual grant applications to get funding. But in the end, big research institutions tend to keep getting the same amount of money each year. So why do all of this? Why not instead give each institution a lump sum of money for research and attach reproducibility, replication and scientific integrity requirements to the funds?

That one is really controversial. It would put the burden on the institution to figure out the best way to spend the money. It would require very smart institutional leadership, with long-term vision and the ability to identify quality science. It wouldn’t be easy. But you could over time get to see which institutions funded projects that were reproducible and translatable, and which ones didn’t.

A benefit of this approach is that people could spend less time writing grants and more time on research.

Then on the publication side, top journals could start to prioritize submissions that come with replication supplements. Once a scientist has made a new discovery, they would ask an outside laboratory to try to reproduce it, and then attach that as a supplemental submission to give a higher level of confidence in their work. I think this is actually a good idea and not that hard to implement.

You also want to shrink the scientific workforce, and you advocate for cameras and keystroke monitors in labs. How do you think these will go down with the research community?

There are cameras in cockpits and cameras behind the barista in Starbucks. There is a lot of money at stake here, too, and eventually there are human lives at stake here. Why is it so outrageous then to say that somebody has to try to control it?

There are so many things we could do. I’m not saying that my ideas are brilliant. I’d love to see more out-of-the-box ideas. If somebody else comes up with better ideas, God bless them; let them implement it. This is just a starting point.

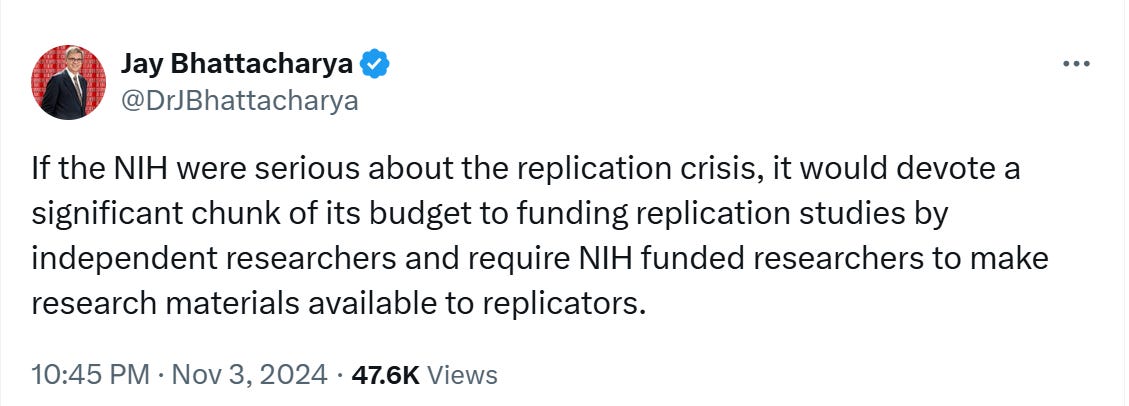

Stanford’s Jay Bhattacharya, who has been tapped to lead the National Institutes of Health, has posted on X that increased funding for replication studies could help address irreproducibility. The research community is concerned about the future of the NIH under President Trump, but could there be a chance his NIH pick will prioritize replication efforts?

Amen to that tweet.

I don’t know Jay, but if he gets the job, I don’t think he wants to go down in history as the person who destroyed American biomedical science. He wants to go down in history as the person who reformed American biomedical science. Maybe I am still naive and hope for too much from our politicians or governments, but this reform has to come from the top, from whoever holds and controls the money. The American system, and the NIH in particular, has a lot of influence. They have more power than even they perhaps realize, if they really wanted to do something.

But Jay is not yet the head of the NIH. The day-to-day events that are happening, such as the stopping of meetings and purchases, feel punitive. It does not feel like they are part of some improvement master plan.

You punctuated the book with dozens of funny, dark cartoons. Which of these is your favorite?

I went through so many cartoons, and that was so much fun. They have this biting humor. Honestly, some of those cartoons are even more offensive than anything I say with words. They hammer down some points.

I think one of the most offensive ones is the scientists at the “publication workshop” [four scientists in a restaurant, ordering up a paper on herbal nanoparticles in cancer cells for publication in a journal with good impact factor]. Another one is of a recruitment committee looking at three candidates for a job. One is a plagiarist, one is a cheat, and the third is a sexual harasser, and they decide to go with the one with the most grant money. But it’s not a joke—I have seen this kind of thing.

I rest my case right here; it’s a good place for a case to rest.